Group By, With Lambda Syntax

Last week, we explored Group By using query syntax.

I also provided a hint as to what the Group By will look like when formulated using Lambda Expressions.

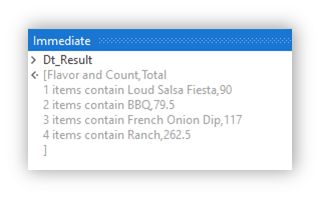

I have modified the test case a little, because If I were to merely pull out the Key and Count, that would bore me to death.

We will perform three different operations, with the last operation being a little more realistic.

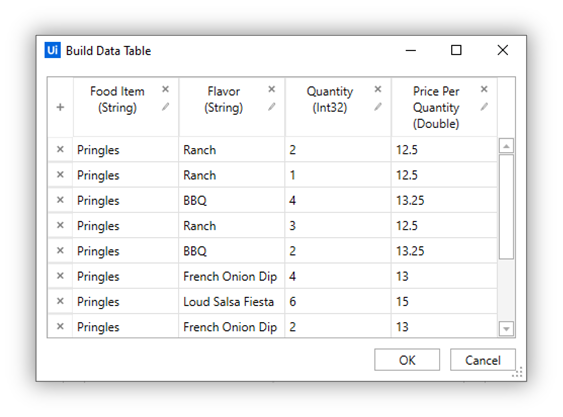

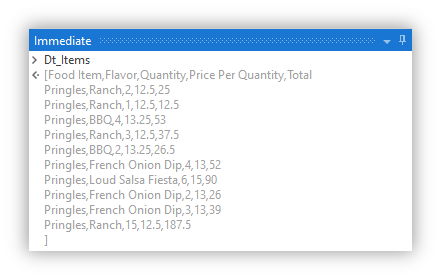

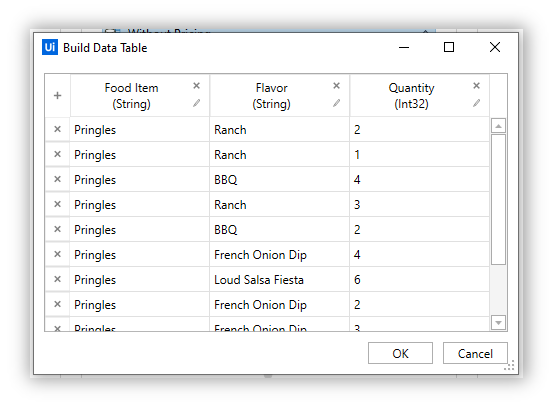

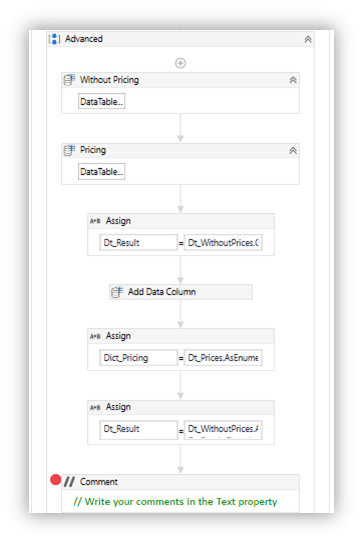

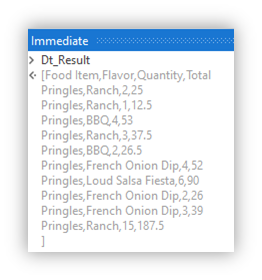

Here is the modified test data:

Bring it back!

First, let’s bore ourselves with a lambda syntax that calculates the Total and adds it back to our original DataTable.

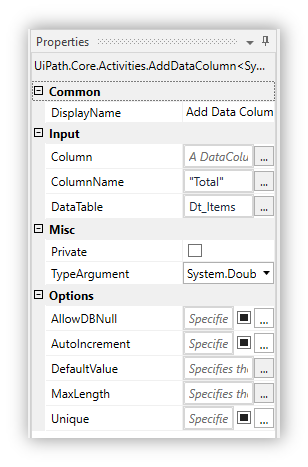

But before that, we have to add a column to our DataTable, so that our Totals will have a place to call home.

Why did I drag in an Add Column Activity instead of using LINQ? Because KISS is important.

I had come across a LINQ which enables us to add DataColumns to a DataTable, but I wasn’t able to break it down properly. I might explore it later and pen an article on that, but for today let’s Keep It Simple Silly.

Now that our Dt_Items has opened up a new apartment for Totals, let’s start populating it with Tenants!

Lambda Espresso

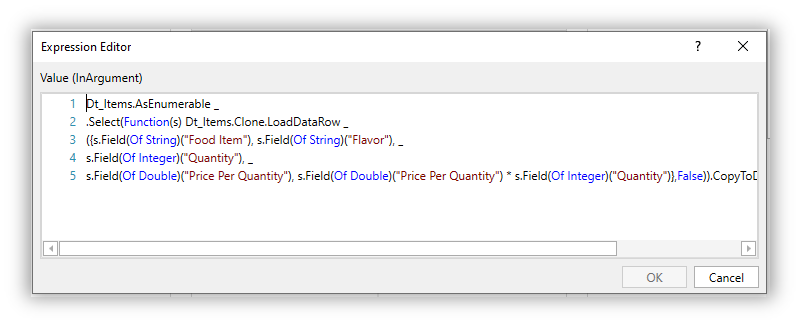

As always, we have to bring out our cutting board and chop out DataTable into an IEnumerable of DataRows.

Dt_Items.AsEnumerableWhat comes next?

Remember, we are only filling the Total Column with data. Grouping isn’t required here, which means we can directly pool the result.

But what will the result be?

Notice, that there is a column which contains the quantities per item, and another column with its individual pricing.

We simply have to find the product between the two and place it into our Total Column.

To do that, we can directly generate the DataRow and add the necessary row items using a Select Clause.

I’ll perform it in steps, so that you too can follow along.

Dt.AsEnumerable.Select(Function(s) Dt_Items.Clone)Dt.AsEnumerable.Select(Function(s) Dt_Items.Clone.LoadDataRow)Lets zoom into the Select Function.

Select(Function(s) Dt_Items.Clone _

.LoadDataRow({s(0),s(1),s(2),(s(1)*s(2))}, False)Don’t worry about the False at the end. It tells the complier that we aren’t imparting changes to an existing data row, but are interested in creating an entirely new set of data rows.

Like I said, don’t worry about it. It’s always set to false. Don’t fact check that though.

This is the entire solution:

Dt.AsEnumerable _

.Select(Function(s) Dt_Items.Clone _

.LoadDataRow({s(0),s(1),s(2),(s(1)*s(2))}, False)

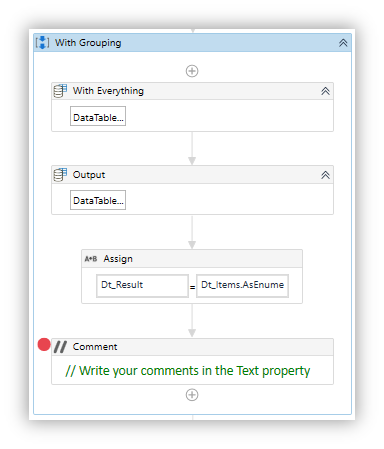

Now For Some Grouping Action

The previous example was pretty straightforward wasn’t it?

Let’s spice things up a little, and retrieve the Prices corresponding to each flavour, along the number of Items per flavour.

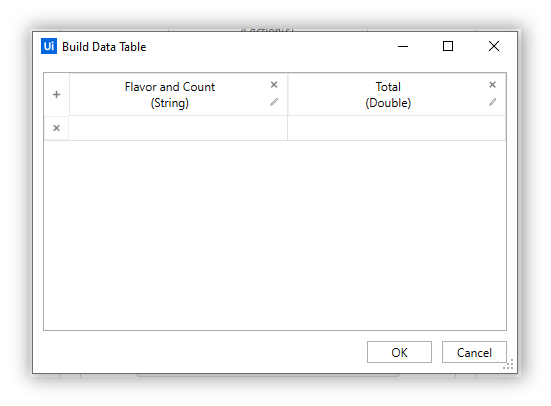

We will have to build the Result DataTable since the Columns will be different from the source(Dt_Items).

For this, we will have to divide them into groups, but before that I want you to think about which column we ought to group the entire collection by.

.

.

.

.

.

Yes!

You guessed it right, we have to group it by the Flavor Column.

Grouping it by the Price Per Quantity Column also divides the collection appropriately, but the Key would reference the Price Per Quantity Items, and not the Flavor.

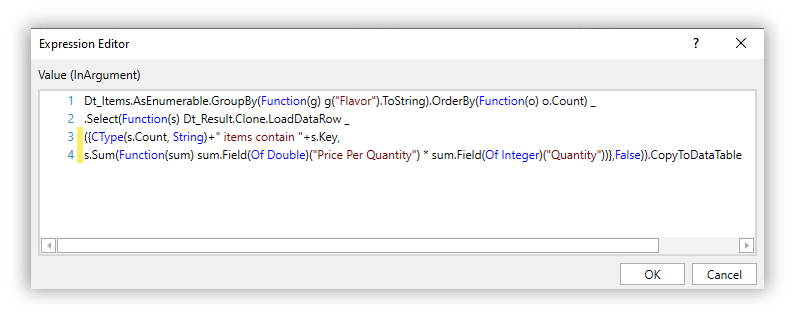

Dt_Items.AsEnumerable _

.GroupBy(Function(g) g.Field(Of String)(“Flavor”))This is the first step.

Can you guess what comes after this?

Yes, we have to load the data rows and pool the data accordingly.

Dt_Items.AsEnumerable _

.GroupBy(Function(g) g.Field(Of String)(“Flavor”)).Select(Dt_Result.Clone _

.LoadDataRow({s(1)+“ contains ”+s(0).Count+ “ items” ,s(1)*s(2)}

,False)).CopyToDataTableNOPE.

That won’t work.

Remember, we have grouped the collection, so this will not work.

We can only reference it using the Group Attributes.

Dt_Items.AsEnumerable.GroupBy(Function(g) g.Field(Of String)(“Flavor”)).Select(Dt_Result.Clone

({s.Key+“ contains ”+s.Count.ToString+“ items”,

s.Sum(Function(sum) sum.Field(Of Integer)(“Quantity”)*sum.Field(Of Double)(“Price Per Quantity”)},False).CopytoDataTableThe key contains the Flavor, the Count is pretty self-explanatory, and the s.LINQ is pretty non-self-explanatory.

Diving Into The Non-Explanatory

Our reference “s” isn’t holding a single item. The Lambda function is iterating through each group coming out of our previous Method which grouped the collection of data rows into sub collections.

It holds the group pertaining to each Flavor. We have to sum all item present in each group, which is why LINQ made a guest appearance here.

If two Pringles are BBQ flavoured, and costs $13 a piece, then the sum total will amount to $26.

Yes, its daylight robbery, but we only found out about it thanks to detective LINQ.

Onto The Final Example

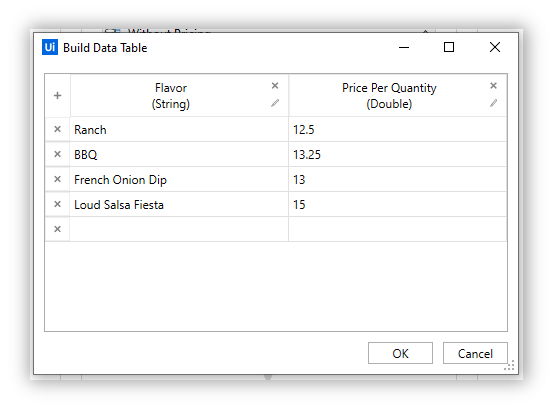

There are times when the data is present in different sources and in different formats.

We won’t dive into any complexities, let’s stick with what we are familiar with for today.

Imagine you had two data tables:

What would the approach be?

I have explored one such example in an earlier post.

Head over to there, study the example, and see if you can resolve this problem using the same technique.

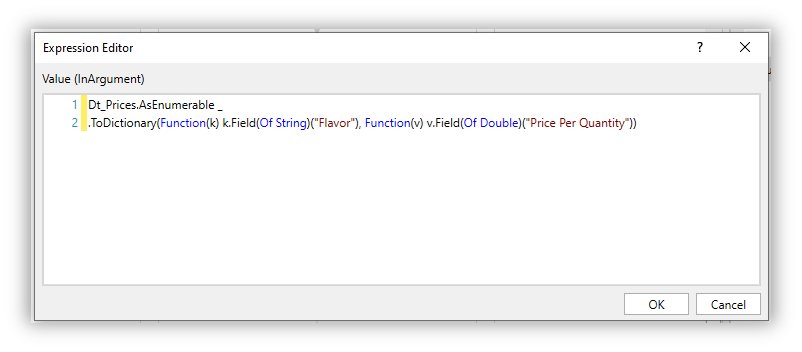

Which DataTable do you think would make a good candidate for conversion?

Think about it for a while, before you proceed.

.

.

.

.

.

Dt_Prices.AsEnumerable.ToDictionary(Function(k) k.Field(Of String)("Flavor"), Function(v) v.Field(Of Double)("Price Per Quantity"))

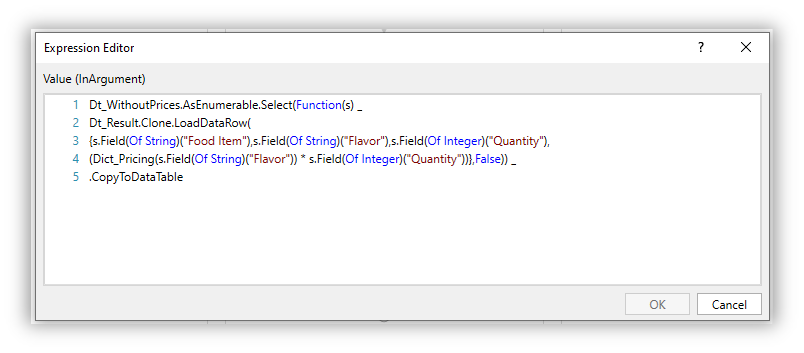

We have our Dictionary ready, and all that is remaining is for us to plug it into the Load Data Row section like so:

Dt_WithoutPrices.AsEnumerable _

.Select(Function(s) Dt_Result.Clone.LoadDataRow({s.Field(Of String)("Food Item"),s.Field(Of String)("Flavor"),s.Field(Of Integer)("Quantity"),(Dict_Pricing(s.Field(Of String)("Flavor")) * s.Field(Of Integer)("Quantity"))},False)).CopyToDataTable

The Dictionary will pull out the Quantity, which is then multiplied with the Price Per Quantity.

I would recommend trying it out on your own.

You can’t absorb information just by reading it – that is done by impressing it upon yourself through practice.

Your brain likes to be stimulated, which is why you spend hours on social media.

Only problem with that, is it is not productive.

If you spend a fraction of the time you do on social media, on what I have written down for you, it will enrich you.